As a result of discussions that took place during the event The Biosphere Code in Stockholm on 4th October 2015, Stockholm University researcher Victor Galaz and colleagues outlined a manifesto for algorithms in the environment.

The precepts of the in-progress Biosphere Code Manifesto are recommendations for the use of algorithms, born out of a growing awareness that algorithms so deeply permeate our technology that "they consistently and subtly shape human behavior and our influence on the world's landscapes, oceans, air, and ecosystems", as The Guardian wrote in an extensive article.

We are just starting to understand the effects that algorithms have on our lives. But their environmental impact may be even greater, demanding public scrutiny. Here is the Biosphere Code Manifesto v1.0, with its seven principles.

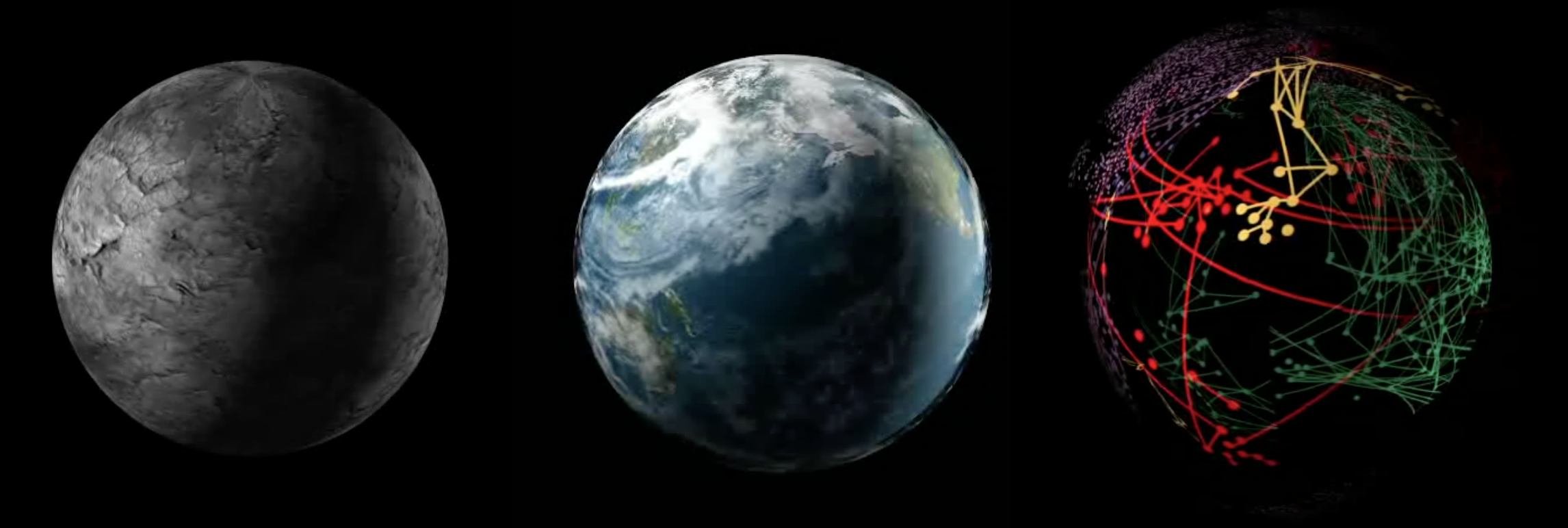

Algorithms are transforming the world around us. They come in many shapes and forms, and soon they will permeate all spheres of technology, ranging from the technical infrastructure of financial markets to wearable and embedded technologies. One often overlooked point, however, is that algorithms are also shaping the biosphere – the thin complex layer of life on our planet on which human survival and development depend. Algorithms underpin the global technological infrastructure that extracts and develops natural resources such as minerals, food, fossil fuels and living marine resources. They facilitate global trade flows with commodities and they form the basis of environmental monitoring technologies. Last but not least, algorithms embedded in devices and services affect our behavior - what we buy and consume and how we travel, with indirect but potentially strong effects on the biosphere. As a result, algorithms deserve more scrutiny.

It is therefore high time that we explore and critically discuss the ways by which the algorithmic revolution – driven by applications such as artificial intelligence, machine learning, logistics, remote sensing, and risk modelling – is transforming the biosphere, and in the long term, the Earth’s capacity to support human survival and development.

Below we list seven Principles that we believe are central to guiding the current and future development of algorithms in existing and rapidly evolving technologies, such as blockchains, robotics and 3D printing. These Principles are intended as food for thought and debate. They are aimed at software developers, data scientists, system architects, computer scientists, sustainability experts, artists, designers, managers, regulators, policy makers, and the general public participating in the algorithmic revolution.

Principle 1. With great algorithmic powers come great responsibilities

Those involved with algorithms, such as software developers, data scientists, system architects, managers, regulators, and policymakers, should reflect on the impacts of their algorithms on the biosphere and take explicit responsibility for them now and in the future.

Algorithms increasingly underpin a broad set of activities that are changing the planet Earth and its ecosystems, such as consumption behaviors, agriculture and aquaculture, forestry, mining and transportation on land and in the sea, industrial manufacturing and chemical pollution. Some impacts may be indirect and become visible only after considerable time. This leads to limited predictability in the early and even later stages of algorithmic development. However, in cases where algorithmic development is predicted to have detrimental impacts on ecosystems, biodiversity or other important biosphere processes on a larger scale, or where detrimental impacts become clear over time, those responsible for the algorithms should take remedial action. Only in this way will algorithms fulfill their potential to shape our future on the planet in a sustainable way.

Principle 2. Algorithms should serve humanity and the biosphere at large

The power of algorithms can be used for both good and bad. At the moment there is a risk of wasting resources on solving unimportant problems serving the few, thereby undermining the Earth system’s ability to support human development, innovation and well-being.

Algorithms should be considerate of the biosphere and facilitate transformations towards sustainability. They can help us better monitor and respond to environmental changes. They can support communities across the globe in their attempts to re-green urban spaces. In short, algorithms should encourage what we call ecologically responsible innovation. Such innovation improves human life without degrading the life-supporting ecosystems - and preferably even strengthening ecosystems - on which we all ultimately depend.

Principle 3. The benefits and risks of algorithms should be distributed fairly

It is imperative for algorithm developers to consider more seriously issues related to the distribution of risks and opportunities. Technological change is known to affect social groups in different ways. In the worst case, the algorithmic revolution may perpetuate and intensify pressures on poor communities, increase gender inequalities or strengthen racial and ethnic biases. This also includes the distribution of environmental risks, such as chemical hazards, or loss of ecosystem services, such as food security and clean water.

We recognize that these negative distributional effects can be unintentional and emerge unexpectedly. Shared algorithms can cause conformist herding behavior, producing systemic risk. These are risks that often affect people who are not beneficiaries of the algorithm. The central point is that developing algorithms that provide benefits to the few and present risks to the many is both unjust and unfair. The balance between expected utility and ruin probability should be recognized and understood.

For example, some of the tools within finance, such as derivative models and high-speed trading, have been called "weapons of mass destruction" by Warren Buffett. These same tools, however, could be remade and engineered with different values and instead used as "weapons of mass construction". Projects and ideas such as Artificial Intelligence for Development, Question Box, weADAPT, Robin Hood Coop and others show the role of algorithms in reducing social vulnerability and accounting for issues of justice and equity.
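The tension between expected utility and ruin probability mentioned under this Principle can be made concrete with a toy calculation. The sketch below is not taken from the manifesto: it assumes a hypothetical repeated bet in which the whole stake is wagered every round, a win multiplies it, and a single loss wipes it out. The function name and the numbers are invented for illustration.

```python
def repeated_bet(win_probability, win_multiplier, rounds):
    """Toy model (an assumption, not a real trading strategy).

    The entire stake is wagered each round. A win multiplies the stake by
    win_multiplier; a single loss reduces it to zero for good.
    Returns (expected_wealth, ruin_probability) for an initial wealth of 1.
    """
    # The stake survives only if every single round is won.
    survival_probability = win_probability ** rounds
    # Expected wealth = P(survive) * wealth if survived = (p * m) ** rounds.
    expected_wealth = (win_probability * win_multiplier) ** rounds
    ruin_probability = 1.0 - survival_probability
    return expected_wealth, ruin_probability


expected, ruin = repeated_bet(win_probability=0.6, win_multiplier=2.0, rounds=10)
print(f"expected wealth: {expected:.2f}, probability of ruin: {ruin:.3f}")
```

With these invented numbers the expected wealth grows roughly sixfold, while the probability of losing everything is above 99 percent. An algorithm tuned only for expected return would accept this bet; one that also weighs ruin probability would not.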

Principle 4. Algorithms should be flexible, adaptive and context-aware

Algorithms that shape the biosphere should be created in such a way that they can be reprogrammed if serious repercussions or unexpected results emerge. This applies both to accessibility for humans to alter the algorithm in case of emergency and to the algorithm’s ability to alter its own behavior if needed.

Neural network machine learning algorithms can and do misbehave - misclassifying images with offensive results, assigning people with the “wrong” names low credit ratings - without any transparency as to why. Other systems are designed for a context that may change while the algorithm stays fixed. We cannot predict the future, but we often design and implement algorithms as if we could. Still, algorithms can be designed to observe and adapt to changes in resources they affect and to the context in which they operate to minimize the risk of harm.

Algorithms should be open, malleable and easy to maintain. They should allow for the implementation of new creative solutions to urgent emerging challenges. Algorithms should be designed to be transparent with regard to operation and results in order to make errors and anomalies evident as early as possible and to make them possible to fix. Also, when possible, the algorithm should be made context-aware and able to adapt to unforeseen results while alerting society about these results.

Principle 5. Algorithms should help us expect the unexpected

Global environmental and technological changes are likely to create a turbulent future. Algorithms should therefore be used in such a way that they enhance our shared capacity to deal with climatic, ecological, market and conflict-related shocks and surprises. This also includes problems caused by errors or misbehaviors in other algorithms - they are also part of the total environment and often unpredictable.

This is of particular concern when developing self-learning algorithms that can learn from and make predictions on data. Such algorithms should not only be designed to enhance efficiency (e.g., maximising biomass production in forestry, agriculture and fisheries). They should also encourage resilience to unexpected events and ensure a sustainable supply of the essential ecosystem services on which humanity depends. Known approaches to achieve this are diversity, redundancy and modularity as well as maintaining a critical perspective and avoiding over-reliance on algorithms.

Also, algorithms should not be allowed to fail quietly. For example, the hole in the ozone layer was overlooked for almost a decade before it was discovered in the mid-1980s. The extremely low ozone concentrations recorded by the monitoring satellites were being treated as outliers by the algorithms and therefore discarded, which delayed by a decade our response to one of the most serious environmental crises in human history. Diversity and redundancy helped discover the error.
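One way to read "not failing quietly" in code is to set suspicious values aside instead of deleting them, and to raise a visible signal when too many are set aside. The following is a minimal sketch under stated assumptions: the `screen_readings` function, the `ScreeningReport` class, the plausibility bounds and the 5 percent threshold are all hypothetical, and this is not how any actual satellite pipeline works.

```python
from dataclasses import dataclass, field


@dataclass
class ScreeningReport:
    """Result of screening one batch of sensor readings (hypothetical type)."""
    accepted: list = field(default_factory=list)
    flagged: list = field(default_factory=list)  # kept for review, never dropped
    needs_review: bool = False


def screen_readings(readings, low, high, max_flagged_fraction=0.05):
    """Split readings into accepted and flagged values.

    Values outside [low, high] are set aside rather than deleted. If more than
    max_flagged_fraction of the batch is flagged, the batch is marked for human
    review, because a persistent run of "outliers" may be a real signal.
    """
    report = ScreeningReport()
    for value in readings:
        if low <= value <= high:
            report.accepted.append(value)
        else:
            report.flagged.append(value)
    if readings:
        flagged_fraction = len(report.flagged) / len(readings)
        report.needs_review = flagged_fraction > max_flagged_fraction
    return report


# Invented readings in arbitrary units; the bounds are assumptions as well.
readings = [310, 305, 150, 140, 145, 300]
report = screen_readings(readings, low=180, high=500)
if report.needs_review:
    print(f"Review needed: {len(report.flagged)} of {len(readings)} flagged: {report.flagged}")
```

The design choice is that the filter cannot discard data without leaving a trace: flagged values remain available to a second, independent check, which is the kind of redundancy the text credits with uncovering the error.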

Principle 6. Algorithmic data collection should be open and meaningful

Algorithms, especially those impacting the biosphere or personal privacy, should be open source. The general public should be made aware of which data are collected, when they are collected, how they will be used and by whom. As often as possible, the datasets should be made available to the public - keeping in mind the risks of invading personal privacy, and made available in such a fashion that others can easily search, download and use them. In order to be meaningful and to avoid hidden biases, the datasets upon which algorithms are trained should be validated.

With a wide range of sensors, big data analytics, blockchain technologies, and peer-to-peer and machine-to-machine micropayments, we have the potential to not only make much more efficient use of the Earth’s resources, but we also have the means to develop innovative value creation systems. Algorithms enable us to achieve this potential; however, at the same time, they also entail great risks to personal privacy, even to those individuals who opt out of the datasets. Without a system built on trust in data collection, fundamental components of human relationships may be jeopardized.

Principle 7. Algorithms should be inspiring, playful and beautiful

Algorithms have been a part of artistic creativity since ancient times. In interaction with artists, self-imposed formal methods have shaped the aesthetic result in all genres of art. For example, when composing a fugue or writing a sonnet, you follow a set of procedures, integrated with aesthetic choices. More recently, artists and researchers have developed algorithmic techniques to generate artistic materials such as paintings and music, and algorithms that emulate human artistic creative processes. Algorithmically generated art allows us to perceive and appreciate the inherent beauty of computation and to create art of otherwise unattainable complexity. Algorithms can also be used to mediate creative collaborations between humans.

But algorithms also have aesthetic qualities in and of themselves, closely related to the elegance of a mathematical proof or a solution to a problem. Furthermore, algorithms and algorithmic art based on nature have the potential to inspire, educate and create an understanding of natural processes.

We should not be afraid to involve algorithmic processes in artistic creativity and to enhance human creative capacity and playfulness, nor to open up for new modes of creativity and new kinds of art. Algorithms should be used creatively and aesthetically to visualize and allow interaction with natural processes in order to renew the ways we experience and understand nature and to inspire people to consider the wellbeing of future generations.

Aesthetic qualities of algorithms applied in society, for example, in finance, governance and resource allocation, should be unveiled to make people engaged and aware of the underlying processes. We should create algorithms that encourage and facilitate human collaboration, interaction and engagement - with each other, with society, and with nature.

The principles presented here are the result of discussions that took place at the event The Biosphere Code in Stockholm on October 4th, 2015. Contributors include (in alphabetical order) Maja Brisvall (Quantified Planet), Palle Dahlstedt (University of Gothenburg, Aalborg University), Victor Galaz (Stockholm Resilience Centre, Stockholm University), Daniel Hassan (Robin Hood Minor Asset Management Cooperative), Koert van Mensvoort (Next Nature Network, Eindhoven University of Technology), Andrew Merrie (Stockholm Resilience Centre, Stockholm University), Fredrik Moberg (Albaeco / Stockholm Resilience Centre, Stockholm University), Anders Sandberg (University of Oxford), Peter Svensson (Evothings.com), Ann-Sofie Sydow (The Game Assembly), Robin Teigland (Stockholm School of Economics), and Fernanda Torre (Stockholm Resilience Centre).

